WordCount fails on a Hadoop cluster deployed on Solaris

Running wordcount on a Hadoop cluster deployed on Solaris fails with the following output:

[admin@4bf635fa-5f3e-4b47-b42d-7558a6f0bbff ~]$ hadoop jar /opt/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.1.jar wordcount /input /output

15/08/20 00:48:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/08/20 00:48:10 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.77.27:8032

15/08/20 00:48:11 INFO input.FileInputFormat: Total input paths to process : 3

15/08/20 00:48:11 INFO mapreduce.JobSubmitter: number of splits:3

15/08/20 00:48:11 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1439973008617_0002

15/08/20 00:48:13 INFO impl.YarnClientImpl: Submitted application application_1439973008617_0002

15/08/20 00:48:13 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1439973008617_0002/

15/08/20 00:48:13 INFO mapreduce.Job: Running job: job_1439973008617_0002

15/08/20 00:48:35 INFO mapreduce.Job: Job job_1439973008617_0002 running in uber mode : false

15/08/20 00:48:35 INFO mapreduce.Job: map 0% reduce 0%

15/08/20 00:48:35 INFO mapreduce.Job: Job job_1439973008617_0002 failed with state FAILED due to: Application application_1439973008617_0002 failed 2 times due to Error launching appattempt_1439973008617_0002_000002. Got exception: java.net.ConnectException: Call From localhost/127.0.0.1 to localhost:37524 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:422)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)

at org.apache.hadoop.ipc.Client.call(Client.java:1480)

at org.apache.hadoop.ipc.Client.call(Client.java:1407)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy83.startContainers(Unknown Source)

at org.apache.hadoop.yarn.api.impl.pb.client.ContainerManagementProtocolPBClientImpl.startContainers(ContainerManagementProtocolPBClientImpl.java:96)

at org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher.launch(AMLauncher.java:119)

at org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher.run(AMLauncher.java:254)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:609)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:707)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:370)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1529)

at org.apache.hadoop.ipc.Client.call(Client.java:1446)

... 9 more

. Failing the application.

15/08/20 00:48:35 INFO mapreduce.Job: Counters: 0

The output of hadoop dfsadmin -report is as follows:

[admin@4bf635fa-5f3e-4b47-b42d-7558a6f0bbff ~]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

15/08/20 00:49:35 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 344033951744 (320.41 GB)

Present Capacity: 342854029952 (319.31 GB)

DFS Remaining: 342853630464 (319.31 GB)

DFS Used: 399488 (390.13 KB)

DFS Used%: 0.00%

Under replicated blocks: 7

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (1):

Name: 192.168.77.28:50010 (slave1)

Hostname: localhost

Decommission Status : Normal

Configured Capacity: 344033951744 (320.41 GB)

DFS Used: 399488 (390.13 KB)

Non DFS Used: 1179921792 (1.10 GB)

DFS Remaining: 342853630464 (319.31 GB)

DFS Used%: 0.00%

DFS Remaining%: 99.66%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Aug 20 00:49:36 UTC 2015

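192.168.77.27 (master) runs the ResourceManager and NameNode, and 192.168.77.28 (slave1) runs the DataNode and NodeManager. It looks like Hadoop is treating the hostname of both the DataNode and the NodeManager as localhost. That would explain the error: when the ResourceManager tries to launch the application attempt, it calls localhost:37524 instead of the slave's address, and the connection is refused.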
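The thread does not say what the eventual fix was. One common cause of this symptom, and one the linked ConnectionRefused wiki page suggests checking, is that a node's own hostname is mapped to a loopback address in its hosts file, so the daemons register themselves as localhost. The sketch below is only an illustration under that assumption: the helper names `loopback_names`, `check_node`, and `check_local_node` are hypothetical, and the default path `/etc/hosts` is assumed.

```python
import ipaddress
import socket


def loopback_names(hosts_text):
    """Return the set of names that a hosts file maps to a loopback address."""
    names = set()
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and padding
        if not line:
            continue
        fields = line.split()
        try:
            addr = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # first field is not an IP address, skip the line
        if addr.is_loopback:  # 127.0.0.0/8 or ::1
            names.update(name.lower() for name in fields[1:])
    return names


def check_node(hostname, hosts_text):
    """Return a warning if hostname is mapped to loopback, else None."""
    if hostname.lower() in loopback_names(hosts_text):
        return f"{hostname} is mapped to a loopback address"
    return None


def check_local_node(hosts_path="/etc/hosts"):
    """Check this machine's own hostname against its hosts file (assumed path)."""
    with open(hosts_path) as f:
        hosts_text = f.read()
    return check_node(socket.gethostname(), hosts_text)
```

Calling `check_local_node()` on each node returns a warning string if that node's hostname appears on a loopback line, and `None` otherwise. A `None` result does not rule out other causes, such as the machine's hostname itself being set to localhost or a DNS misconfiguration.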

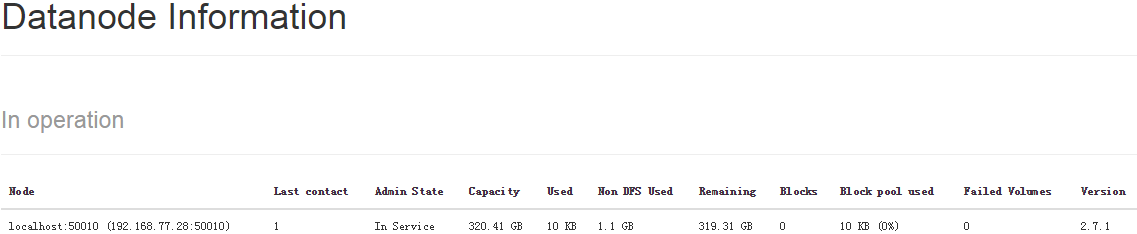

DataNode information:

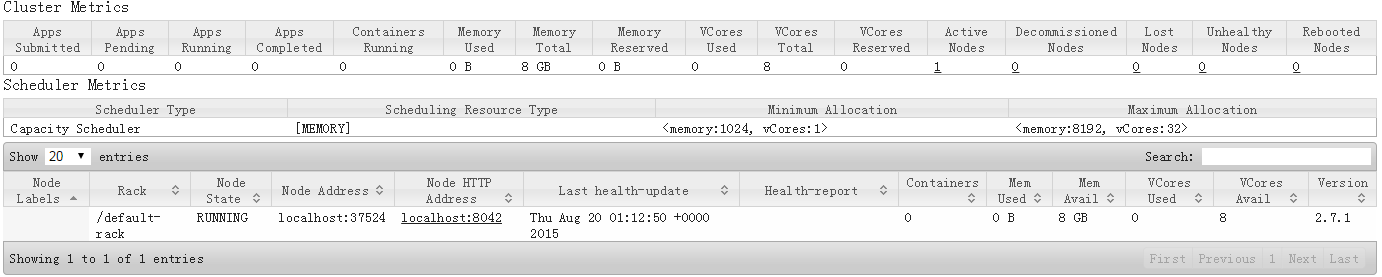

NodeManager information:

Resolved.