Python crawler question


[Closed question]

Closed on 2016-12-06 08:40

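I have recently been learning to write Python crawlers, specifically form submission. My attempts to log in to Zhihu and 51CTO both failed, and I cannot find a way to improve the code or a line of approach for diagnosing the failure. For real-world logins, what approaches help, and how do you debug a login that does not succeed?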

For example, logging in to 51CTO:

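I first log in once with Chrome, build the header from the request the browser sent, inspect the hidden elements of the login form, and build params from them.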

    # coding=utf-8
    # Python 2 script (raw_input, print statement)
    import requests
    import re
    from bs4 import BeautifulSoup

    # Headers copied from a real Chrome login request
    header = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'en-US,en;q=0.8,zh-CN;q=0.6,zh;q=0.4',
        'Connection': 'keep-alive',
        'Content-Type': 'application/x-www-form-urlencoded',
        'Upgrade-Insecure-Requests': '1',
        'Host': 'home.51cto.com',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
    }

    username = raw_input("input name:")
    password = raw_input("input password:")

    # Fetch the login page first so the session receives its cookies
    index_url = 'http://home.51cto.com/index'
    session = requests.Session()
    index = session.get(index_url, headers=header)

    # Use a regex to find the form's hidden _csrf token
    # (non-greedy, so the match stops at the first closing quote)
    reg = r'type="hidden" name="_csrf" value="(.*?)">'
    pattern = re.compile(reg)
    result = pattern.findall(index.content)
    print result
    _csrf = result[0]  # findall returns a list; post the first match, not the list

    params = {
        '_csrf': _csrf,
        'LoginForm[username]': username,
        'LoginForm[password]': password,
        'LoginForm[rememberMe]': '1'
    }

    # Submit the login form through the same session
    post_url = 'http://home.51cto.com/index'
    req = session.post(post_url, params, headers=header)
    print req.content
    print req.cookies.get_dict()
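The hidden-field step can also be done with an HTML parser instead of a regex, which is less sensitive to attribute order and quoting. The following is a minimal sketch using the standard library's `html.parser` (swapped in here so the example has no third-party dependency). The names `HiddenInputParser` and `extract_hidden_fields` and the sample markup are hypothetical and are not taken from 51CTO's actual page.

```python
from html.parser import HTMLParser


class HiddenInputParser(HTMLParser):
    """Collect the name/value pair of every <input type="hidden"> tag."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)  # attribute names arrive lowercased
        is_hidden = (attr.get("type") or "").lower() == "hidden"
        if is_hidden and attr.get("name"):
            # A missing value attribute is stored as an empty string
            self.fields[attr["name"]] = attr.get("value") or ""


def extract_hidden_fields(html):
    """Return a dict of all hidden form fields found in the HTML text."""
    parser = HiddenInputParser()
    parser.feed(html)
    return parser.fields


# Hypothetical markup, for illustration only
SAMPLE_HTML = '''
<form method="post">
  <input type="hidden" name="_csrf" value="abc123==">
  <input type="text" name="LoginForm[username]">
  <input type="password" name="LoginForm[password]">
</form>
'''

hidden = extract_hidden_fields(SAMPLE_HTML)
print(hidden)
```

Each value in the returned dict is a single string, so it can be merged directly into the form parameters before posting.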

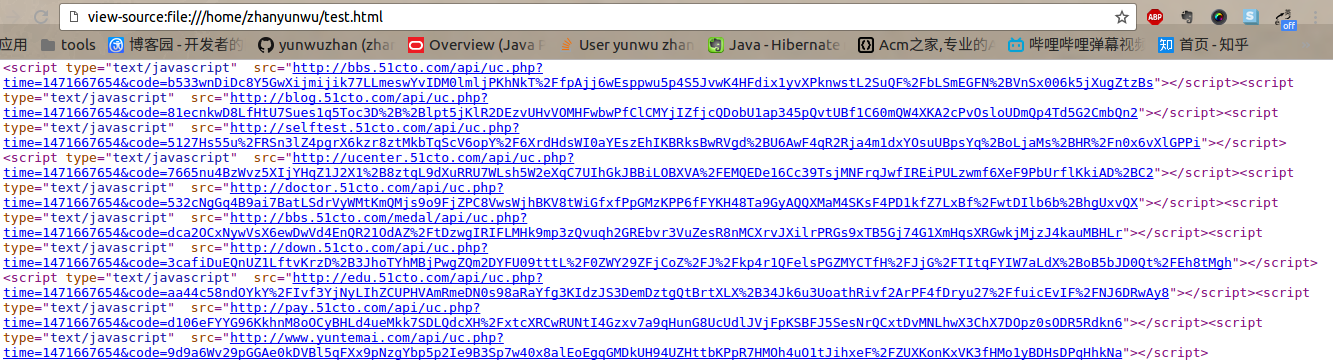

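req.cookies has values, but req.content returns the page shown in the screenshot, whereas in a browser a successful login lands on the user center. Is the site blocking the crawler? How can I improve this?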
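One way to approach the debugging side is to look at more than the body: the status code, the redirect chain, and whether the login form is still present in the response. Below is a rough sketch of such a check. The function name `diagnose_login` and the default marker strings are assumptions for illustration; it only relies on the `status_code`, `url`, `history`, and `text` attributes that a `requests.Response` provides, and the demo uses a stand-in object rather than a real request.

```python
from types import SimpleNamespace


def diagnose_login(response, failure_markers=('name="_csrf"', "LoginForm[password]")):
    """Return a list of observations about a login response.

    `response` needs .status_code, .url, .history and .text.
    `failure_markers` are strings assumed to appear only on the login page.
    """
    notes = ["status: %s" % response.status_code]

    # A successful login usually redirects; list every hop that was followed
    hops = [r.url for r in response.history]
    if hops:
        notes.append("redirects: " + " -> ".join(hops + [response.url]))
    else:
        notes.append("no redirect; final url: " + response.url)

    # If the login form is still in the body, the login was probably rejected
    found = [m for m in failure_markers if m in response.text]
    if found:
        notes.append("login form still present (markers: %s)" % ", ".join(found))
    return notes


# Stand-in for a real response, for illustration only
fake = SimpleNamespace(
    status_code=200,
    url="http://home.51cto.com/index",
    history=[],
    text='<input type="hidden" name="_csrf" value="x">',
)
for line in diagnose_login(fake):
    print(line)
```

With a real session this would be called as `diagnose_login(session.post(post_url, data=params, headers=header))`. Two further things are worth checking: `re.findall` returns a list, so the `_csrf` value posted should be a single string taken from it; and a non-empty cookie jar does not by itself prove the login worked, since a server may set session cookies before authentication.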

All answers (1)


Looks pretty advanced.