Help: Scrapy's ImagesPipeline does not save any images


Spider file

```python
import copy
import re
from urllib.parse import urlparse

import execjs
import scrapy

from dm5pro.items import Dm5ProItem


class Dm5Spider(scrapy.Spider):
    name = 'dm5'
    # allowed_domains = ['www.xxx.com']
    start_urls = ['http://www.dm5.com/manhua-list/']
    url = 'http://www.dm5.com/manhua-list-p{}/'

    def parse(self, response):
        li_list = response.xpath('/html/body/section[2]/div/ul/li')
        for li in li_list:
            comic_title = li.xpath('./div/div[1]/h2/a/text()').extract_first()
            # urljoin avoids a double slash when the href already starts with '/'
            comic_url = response.urljoin(li.xpath('./div/div[1]/h2/a/@href').extract_first())
            item = Dm5ProItem()
            item['comic_title'] = comic_title
            yield scrapy.Request(comic_url, callback=self.chapter_parse, meta={'item': item})

    def chapter_parse(self, response):
        li_list = response.xpath('//*[@id="detail-list-select-1"]/li')
        for li in li_list:
            chapter_name = li.xpath('./a/text()').extract_first()
            chapter_url = response.urljoin(li.xpath('./a/@href').extract_first())
            # Each chapter request gets its own copy, so chapter_name is not overwritten
            item = copy.deepcopy(response.meta['item'])
            item['chapter_name'] = chapter_name.strip()
            yield scrapy.Request(chapter_url, callback=self.content_parse, meta={'item': item})

    def content_parse(self, response):
        page_text = response.text
        cid = re.findall('var DM5_CID=(.+?);', page_text)[0].strip()
        page_count = re.findall('var DM5_PAGEPCOUNT =(.+?);', page_text)[0].strip()
        _mid = re.findall('var DM5_MID=(.+?);', page_text)[0].strip()
        _dt = re.findall('var DM5_VIEWSIGN_DT="(.+?)";', page_text)[0].strip()
        _sign = re.findall('var DM5_VIEWSIGN="(.*?)";', page_text)[0].strip()
        # Request each page 1..page_count exactly once; `page` changes on every iteration
        for page in range(1, int(page_count) + 1):
            url = (f'{response.url}chapterfun.ashx?cid={cid}&page={page}&key=&language=1&gtk=6'
                   f'&_cid={cid}&_mid={_mid}&_dt={_dt}&_sign={_sign}')
            yield scrapy.Request(url, callback=self.content_parse_2, meta={'item': response.meta['item']})

    def content_parse_2(self, response):
        js_code = response.text
        img_urls = execjs.eval(js_code)  # evaluate the returned JavaScript; the result is a list of URLs
        img_url = img_urls[0]
        # Each page yields its own copy, so src/img_name of one page never overwrite another
        item = copy.deepcopy(response.meta['item'])
        item['src'] = img_url
        item['img_name'] = urlparse(img_url).path.split('/')[-1]
        yield item
```
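The pagination and item-sharing logic of `content_parse`/`content_parse_2` can be checked outside Scrapy. Scrapy runs callbacks concurrently and the images pipeline reads the item again later in `file_path()`, so one item object shared by many requests can be changed by another callback before its image is saved. The following is a minimal sketch under stated assumptions: plain dicts stand in for `Dm5ProItem`, the IDs and timestamp are made-up values, and `build_page_requests` is a hypothetical helper. It builds one `chapterfun.ashx` URL per page with `urllib.parse.urlencode` (which keeps every parameter intact and percent-encodes values such as a timestamp containing a space) and gives every page its own deep copy of the item.

```python
import copy
from urllib.parse import urlencode


def build_page_requests(chapter_url, cid, mid, dt, sign, page_count, item):
    """Return one (url, item) pair per page, pages 1..page_count inclusive.

    Hypothetical helper: each pair carries its own deep copy of `item`, so
    filling in 'src' or 'img_name' for one page cannot affect another page.
    """
    pairs = []
    for page in range(1, page_count + 1):
        params = {
            'cid': cid, 'page': page, 'key': '', 'language': 1, 'gtk': 6,
            '_cid': cid, '_mid': mid, '_dt': dt, '_sign': sign,
        }
        # urlencode escapes spaces and colons in values such as the timestamp
        url = f'{chapter_url}chapterfun.ashx?{urlencode(params)}'
        pairs.append((url, copy.deepcopy(item)))
    return pairs


# Made-up values for illustration only, not real dm5 data
base_item = {'comic_title': 'demo', 'chapter_name': 'ch1'}
pairs = build_page_requests('http://www.dm5.com/m123/', '123', '456',
                            '2021-01-01 10:00:00', 'abc', 3, base_item)
pairs[0][1]['img_name'] = '1.jpg'
print(len(pairs), pairs[1][1].get('img_name'))  # 3 None
```

In the spider, each `(url, item)` pair would become `scrapy.Request(url, callback=self.content_parse_2, meta={'item': item})`.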

items

```python
import scrapy


class Dm5ProItem(scrapy.Item):
    # define the fields for your item here like:
    comic_title = scrapy.Field()
    chapter_name = scrapy.Field()
    src = scrapy.Field()
    img_name = scrapy.Field()
```

settings

```python
import os

BOT_NAME = 'dm5pro'
SPIDER_MODULES = ['dm5pro.spiders']
NEWSPIDER_MODULE = 'dm5pro.spiders'
LOG_LEVEL = 'ERROR'

DEFAULT_REQUEST_HEADERS = {
    'user-agent': "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.20 (KHTML, like Gecko) Chrome/11.0.672.2 Safari/534.20",
    'referer': 'http://www.dm5.com/'
}

IMAGES_STORE = os.path.join(os.path.dirname(os.path.dirname(__file__)), 'images')

ITEM_PIPELINES = {
    'dm5pro.pipelines.Dm5ProPipeline': 300,
}

ROBOTSTXT_OBEY = False
```
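Scrapy's media pipelines log failed downloads (for example an HTTP 403 from an image server with hotlink protection) as warnings, so `LOG_LEVEL = 'ERROR'` hides exactly the "Error downloading file" message that would explain why nothing is saved. A hedged debugging tweak for `settings.py` follows. It assumes, without confirmation, that the image host answers with an error status or a redirect. `MEDIA_ALLOW_REDIRECTS` is a standard Scrapy setting; by default the media pipelines treat a redirected media URL as a failed download.

```python
# settings.py: temporary debugging values (assumption: image requests fail
# with an HTTP error status or a redirect; this has not been confirmed)

# WARNING lets the media pipeline's download-failure messages through
LOG_LEVEL = 'WARNING'

# Follow 301/302 redirects for image URLs instead of counting them as failures
MEDIA_ALLOW_REDIRECTS = True
```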

pipelines

class Dm5ProPipeline(ImagesPipeline):

def get_media_requests(self, item, info):

print(f'{item["src"]}正在下载~~')

yield scrapy.Request(item['src'], meta={'item': item})

def file_path(self, request, response=None, info=None):

comic_title = request.meta['item']['comic_title'] #获取动漫名字

image_store = settings.IMAGES_STORE # 获取settings中的路径

comic_title_path = os.path.join(image_store, comic_title) # 对路径跟动漫名字进行拼接

chapter_name = request.meta['item']['chapter_name'] # 获取章节名称

chapter_name_path = os.path.join(comic_title_path, chapter_name) # 对路径跟章节名称进行拼接

if not os.path.exists(chapter_name_path): # 如果没有这个文件夹

os.makedirs(chapter_name_path) # 对这个文件夹进行创建

# image_name = path.replace("full/", "")

img_name = request.meta['item']['img_name'] # 获取当前图片名称

image_path = os.path.join(chapter_name_path, img_name) #对路径跟图片名称进行拼接

print(image_path)

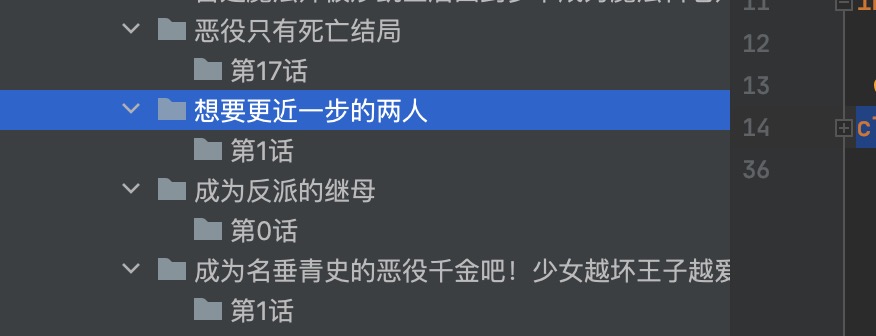

# PycharmProjects/爬虫练手/2.100个简单练手的网站/dm5动漫/dm5pro/images/成为反派的继母/第0话/1_1732.jpg

return image_path # 将路径返回

def item_completed(self, results, item, info):

return item
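The images pipeline joins whatever `file_path()` returns onto `IMAGES_STORE` and creates missing directories itself, so `file_path()` only needs to return a path relative to `IMAGES_STORE`; reading `settings.IMAGES_STORE` and calling `os.makedirs()` there is unnecessary. A minimal sketch, where `build_image_path` is a hypothetical helper: it also replaces characters that Windows forbids in file names, on the assumption that some chapter titles may contain `:` or `?`. Recent Scrapy versions (2.4 and later) also pass the item directly to `file_path()` as the keyword-only `item` argument.

```python
import re


def build_image_path(comic_title, chapter_name, img_name):
    """Return a path RELATIVE to IMAGES_STORE, e.g. 'title/chapter/1.jpg'.

    Hypothetical helper: characters not allowed in Windows file names
    are replaced with '_' and surrounding whitespace is stripped.
    """
    def clean(part):
        return re.sub(r'[\\/:*?"<>|]', '_', part).strip()

    # Forward slashes work on every OS for paths inside IMAGES_STORE
    return '/'.join(clean(p) for p in (comic_title, chapter_name, img_name))


# Hypothetical use inside the pipeline:
#     def file_path(self, request, response=None, info=None, *, item=None):
#         item = item or request.meta['item']
#         return build_image_path(item['comic_title'], item['chapter_name'], item['img_name'])

print(build_image_path('成为反派的继母', '第0话', '1_1732.jpg'))
# 成为反派的继母/第0话/1_1732.jpg
```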

The folders do get created, but no images are downloaded into them.
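The folders appearing is not evidence that downloads work: the files pipeline calls `file_path()` before downloading (to check whether the file is already stored), so the `os.makedirs()` call inside `file_path()` runs even when the download later fails. One hedged way to see what actually happened is to inspect `results` in `item_completed()`, which Scrapy documents as a list of `(success, file_info_or_error)` tuples. The sketch below uses a hypothetical helper, `split_results`, and fake results so it runs without Scrapy.

```python
def split_results(results):
    """Split media-pipeline `results` into saved paths and failures.

    Hypothetical helper: on success the second element is a dict with keys
    such as 'url' and 'path'; on failure it is the error object.
    """
    saved, failed = [], []
    for ok, info in results:
        if ok:
            saved.append(info['path'])
        else:
            failed.append(info)
    return saved, failed


# Hypothetical use in the pipeline (ERROR level stays visible with LOG_LEVEL = 'ERROR'):
#     def item_completed(self, results, item, info):
#         saved, failed = split_results(results)
#         for failure in failed:
#             info.spider.logger.error('image %s failed: %r', item['src'], failure)
#         return item

fake = [(True, {'url': 'http://example.com/1.jpg', 'path': 'a/b/1.jpg'}),
        (False, RuntimeError('403'))]
print(split_results(fake))
```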