Problem encountered when scraping Douban movie information with Python

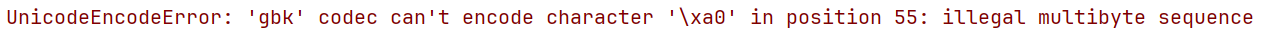

[Unresolved question]

Here is the source code:

    import time

    import requests
    from bs4 import BeautifulSoup

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
    }

    # One URL per result page: start=0, 25, ..., 225
    urls = ["https://movie.douban.com/top250?start=%d&filter=" % i
            for i in range(0, 226, 25)]

    for url in urls:
        resp = requests.get(url, headers=headers)
        soup = BeautifulSoup(resp.text, "html.parser")
        print("Current page:", url, resp.status_code)
        for li in soup.find_all("li"):
            items = li.find("div", class_="item")
            if not items:
                continue  # skip <li> elements that are not movie entries
            Tops = items.find("div", class_="pic").find("em").get_text()  # rank
            imgs = li.find("img")
            src = imgs["src"]  # poster image URL
            Titles = items.find("div", class_="hd").find("span").get_text()  # movie title
            # Director line: "\xa0" (non-breaking space) is assumed here,
            # because the first argument showed up as an empty string in the post.
            Directors = (items.find("div", class_="bd").find("p").get_text().strip()
                         .replace("\n", "<br/>").replace("\xa0", " ")
                         .replace("<br/> ", "\t"))  # director
            Score = items.find("div", class_="star").find("span", class_="rating_num").get_text()  # rating
            spans = items.find("div", class_="star").find_all("span")
            inq = items.find("p", class_="quote")  # short description
            if not inq:
                continue  # some entries have no quote
            print(Tops, Titles, src, Directors, Score, spans[3].text, inq.find("span").get_text())
        time.sleep(5)  # avoid crawling too fast and getting the IP banned; based on the site's robots.txt

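When I first wrote this, it worked without problems. The error shown above appeared after I tried to wrap the code in functions and ran it. Even after I commented the functions out, the problem is still there. However, when I copy this code to a file on my desktop and run it from there, it scrapes the data successfully. How can I fix this?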
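
For context, the function-based layout I was aiming for looks roughly like the following. This is only a minimal sketch, not the exact code that produced the error: `build_page_urls`, `crawl`, and the `fetch` / `parse` callables are hypothetical names introduced here for illustration. It uses only the standard library, so the network request and the BeautifulSoup parsing are assumed to be passed in from outside.

```python
import time
from typing import Callable, Iterable, Iterator, List

BASE_URL = "https://movie.douban.com/top250?start=%d&filter="


def build_page_urls(total: int = 250, page_size: int = 25) -> List[str]:
    """Return one URL per result page (start=0, 25, ..., 225 by default)."""
    return [BASE_URL % start for start in range(0, total, page_size)]


def crawl(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    parse: Callable[[str], Iterable[tuple]],
    delay: float = 5.0,
) -> Iterator[tuple]:
    """Fetch each page, parse it, and yield the parsed rows.

    `fetch` is assumed to take a URL and return the page HTML;
    `parse` is assumed to take HTML and return an iterable of rows.
    """
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay)  # pause between pages, but not before the first one
        html = fetch(url)
        yield from parse(html)
```

In this layout, `fetch` could be a small wrapper that returns `requests.get(url, headers=headers).text`, and `parse` could contain the `soup.find_all("li")` loop from the script above. Nothing runs at import time, so the module can be imported without sending any requests.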