Problem creating a highly available k8s cluster with kubeadm: control plane pods fail to start


I am creating a highly available Kubernetes cluster with the following kubeadm command:

kubeadm init \
    --control-plane-endpoint "kube-api:6443" --upload-certs \
    --pod-network-cidr=10.0.0.0/8

During creation, the kube-apiserver health check fails with this error:

[control-plane-check] kube-apiserver is not healthy after 4m0.004742348s

A control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock logs CONTAINERID'

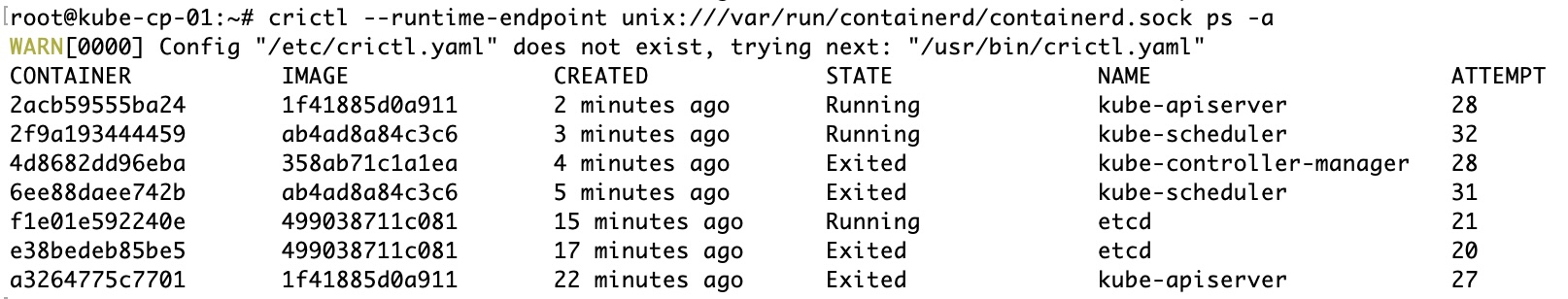

I checked the running status of the pods with the following command:

crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock ps -a

It shows that none of the control plane component pods start normally.

How can this problem be solved?

Best answer


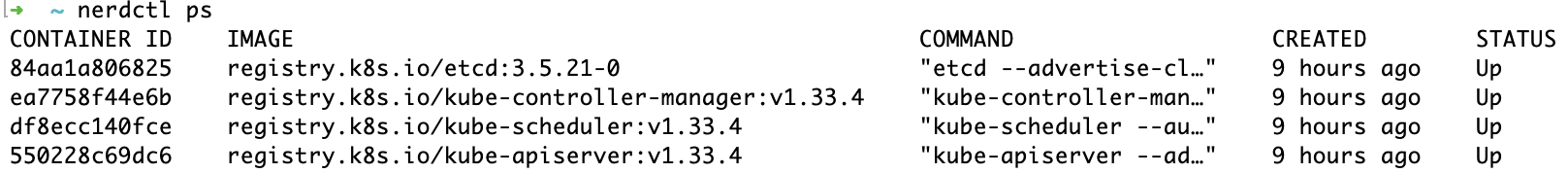

The problem is related to the cgroup driver. In /etc/containerd/config.toml, change SystemdCgroup = false to SystemdCgroup = true, then re-create the cluster with kubeadm init. The same error still appears, but the 4 control plane pods now start normally.
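The config change above could be applied roughly as follows. This is only a sketch: the function name set_systemd_cgroup is made up for illustration, it assumes GNU sed and a config file that already contains a `SystemdCgroup = false` line, and the restart and reset steps in the comments are the usual follow-up rather than something stated in the answer.

```shell
#!/bin/sh
# Hypothetical helper: flip SystemdCgroup from false to true in the given
# containerd config file, keeping the original indentation.
set_systemd_cgroup() {
    sed -i 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$1"
}

# Usage on the node (as root), assuming the path from the answer:
#   set_systemd_cgroup /etc/containerd/config.toml
#   grep -n 'SystemdCgroup' /etc/containerd/config.toml   # confirm the change
#   systemctl restart containerd                          # reload the config
#   kubeadm reset -f                                      # clean up the failed init
#   kubeadm init ...                                      # same flags as before
```

Trying the function on a copy of the file first makes it easy to confirm the line was actually changed before restarting containerd.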

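Once the pods start successfully, the same error still occurs. This part is related to the Alibaba Cloud load balancer: a server behind the load balancer cannot communicate with itself through the load balancer. See https://q.cnblogs.com/q/124859 for details.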

Resolving the control-plane-endpoint to the local address in /etc/hosts solves it:

127.0.0.1 kube-api
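The hosts entry above can be added by hand; a minimal sketch of doing it idempotently is shown below. The function name add_host_entry is hypothetical, and the name check treats the host name as a regular expression, which is fine for kube-api but only approximate for names containing dots.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless a
# non-comment line already maps NAME.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on the control plane node (as root):
#   add_host_entry /etc/hosts 127.0.0.1 kube-api
```

Running it a second time leaves the file unchanged, so it is safe to include in a node setup script.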

https://github.com/kubernetes/kubernetes/issues/125275

– dudu 5 months ago

https://github.com/kubernetes/kubeadm/issues/3069

– dudu 5 months ago

https://github.com/containerd/containerd/issues/4857

– dudu 5 months ago